Background

We've never had more answers at our fingertips: for any question you might think up, a quick query to your favorite search engine can very often give you a nice "Answer Box" with a quote from Wikipedia or another site that directly answers that question. And today, language models can give these answers as part of a seemingly naturalistic chat experience. However, these sometimes jaw-dropping systems can threaten their own future success, because of the "paradox of reuse". In a talk at the 2015 ICWSM conference, Dario Taraborelli introduced the paradox of reuse. McMahon et al. summarized this paradox in a 2017 paper as such: "Wikipedia and other Wikimedia sites (e.g., Wikidata) power these increasingly powerful technologies, which in turn reduce the need to visit Wikipedia". This line of work went on to heavily shape my research as a doctoral student, inspiring me to look into questions around Wikipedia's value to other online platforms, to search engines, and to begin to think about ways to address these issues. The recent introduction of the new Wikimedia Enterprise program is likely in part a response to the research and discussion in this area.

The paradox of reuse was never definitively "solved", but steps were taken to address it. For instance, major search engines like Google, Bing, and DuckDuckGo do appear to be frequently providing links to the Wikipedia platform so there is at least a possibility for search users to visit the site. While the impacts of Wikimedia Enterprise remain mostly speculative, this program could lead to more symbiotic relationships.

Language Models Revivify Paradox of Reuse Concerns

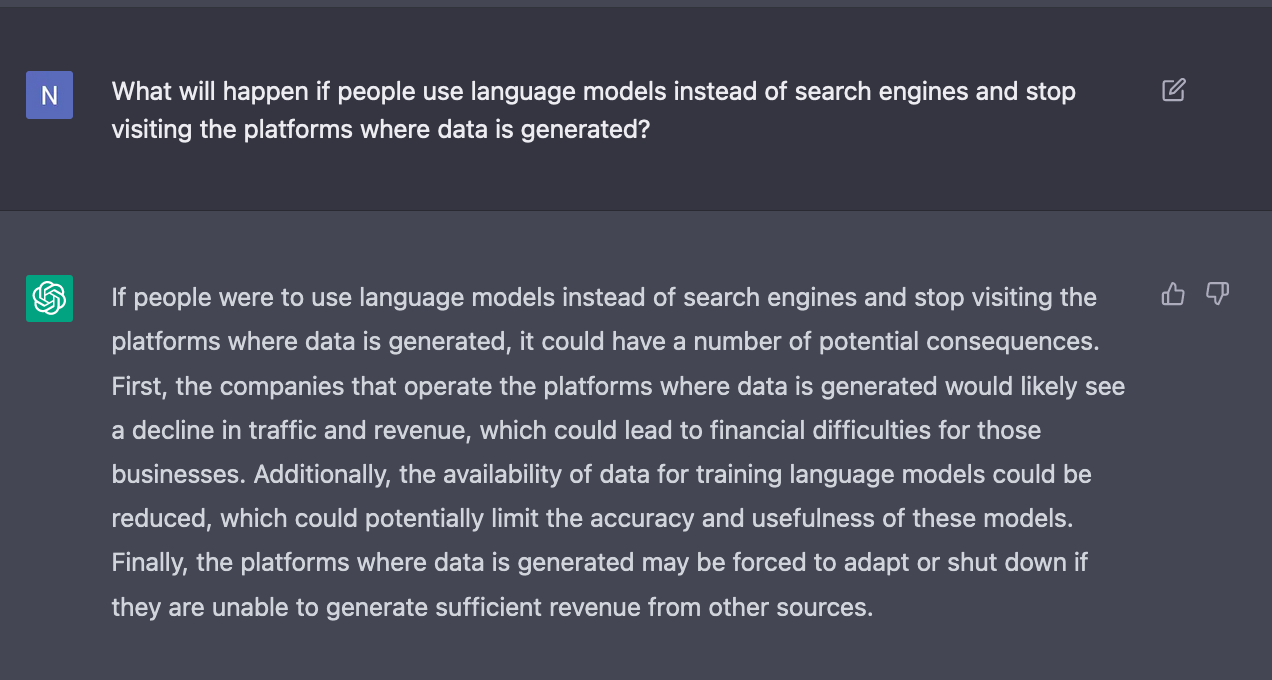

However, with the advent of language models (which seem to be getting a new jaw-dropping release on a weekly basis), this paradox is rearing its head once again, with even higher stakes. In response to the release of ChatGPT on Nov. 30, 2022, there have been claims on Twitter that these systems will replace search engines (which stands in stark contrast to concerns recently raised in an MIT Technology Review piece). In an article in The Atlantic discussing the high stakes of the rise of AI systems such as the start-up Consensus (which, in contrast to ChatGPT, does cite specific papers) and OpenAI's GPT-3, Derek Thompson introduced the term "answer engines". The answer engine term succinctly captures the idea that ChatGPT (or Consensus, or GPT-4...) might supplant Google and Bing. Personally, I was very skeptical of the perspective that language models could represent an alternative to search engines until I spent a good deal of time interacting with ChatGPT myself. After querying the system on a variety of topics -- ranging from questions about my own research and the underlying training data (ChatGPT is designed to avoid answering such questions) to questions about biology and medicine -- I quickly came to understand how others arrived at this conclusion.

Without a doubt, these new systems are enormously exciting and potentially transformative. But there's a problem: if web users seriously shift their use of search engines to answer engines like ChatGPT, we're going to see the paradox of reuse return in an even more extreme form. Users will see fewer links, and the answers themselves will be downstream of content from even more platforms. It's not just Wikipedia that's powering these models (though Wikipedia certainly plays a disproportionate role); it's also StackExchange sites, Reddit communities, other forums, academic literature, and more. It's very important to the sustainability of platforms like StackExchange and Reddit that people actually visit the sites.

Thus, the paradox of reuse (language model edition) may create a serious crisis of sustainability. Put dramatically, we might say these are machines drilling away at their own foundations. Lots of past computing advances rely on "the sum of all human knowledge" (i.e., Wikipedia and friends); these models rely on "the sum of all (public) online human activity". If the models prevent people from visiting the very platforms where this activity used to take place, there will be no dataset for the next model.

We know that the number of people who visit sites like Wikipedia and StackExchange is orders of magnitude greater than the number of people who submit content to these sites (back in 2011, a back-of-the-napkin estimate from Benjamin Mako Hill was that 99.98% of readers never edit). However, even if only a small fraction of readers edit/contribute, reducing visitors to a given platform seems very likely to also reduce contributions to that platform. Put simply, if in the past 100 users searched Google to answer a programming question and 1 of them became a StackExchange contributor, the rise of "answer engines" could reduce this number to zero.
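To make the arithmetic in that example concrete, here is a minimal back-of-the-napkin sketch in Python. It assumes (and this is purely an illustrative assumption, not an empirical finding) that new contributors scale linearly with the number of people who actually land on the platform. The function name, the `share_diverted` parameter, and the 1-in-100 default are hypothetical and taken only from the toy example above.

```python
def new_contributors(searchers: int,
                     share_diverted: float,
                     visitors_per_contributor: int = 100) -> float:
    """Expected new contributors under a simple linear model.

    searchers: people who look for an answer to some question.
    share_diverted: fraction (0.0 to 1.0) who get the answer from an
        "answer engine" and never visit the underlying platform.
    visitors_per_contributor: assumed number of visitors needed to
        produce one contributor (1 in 100 in the toy example).
    """
    if not 0.0 <= share_diverted <= 1.0:
        raise ValueError("share_diverted must be between 0 and 1")
    # Only people who still reach the platform can become contributors.
    remaining_visitors = searchers * (1.0 - share_diverted)
    return remaining_visitors / visitors_per_contributor


if __name__ == "__main__":
    # Compare no diversion, half of searchers diverted, and full diversion.
    for share in (0.0, 0.5, 1.0):
        print(share, new_contributors(100, share))
```

Under this assumed model, 100 searchers with no diversion yield one contributor, and full diversion yields none. Real conversion behavior is almost certainly not linear, so the sketch only illustrates the direction of the effect, not its size.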

Potential Responses

What can we do about this? One direction is to continue researching language models that "cite their sources" (essentially, a training data attribution task). This could allow us to simultaneously enjoy the advantages of language models over search engines, while retaining the basic advantages of accessing links to primary sources. Another option is to build feedback loops into these systems to make it more likely that language model users who might want to contribute to online platforms can do so, ideally in a transparent and symbiotic manner. As a first stage response, systems like ChatGPT could prompt frequent users (or all users) to consider checking out the platforms that comprise much of the training data. This might feel heavy-handed, and whether it would have any impact is worthy of empirical investigation, but it would likely be better than a future in which answer engines operate with no pointers to the platforms that scaffold the creation of their upstream data. As another approach, profits from language models could be earmarked to support the platforms where data creation takes place. OpenAI is collaborating with Shutterstock to pay artists for their contributions to AI art models, and a similar approach could be used at the online community level. While these economic incentives are ill-suited for facilitating individual contributions (it's against Wikipedia policy to pay for edits, and very much opposed to the spirit of peer production), allocating money at the collective level (for instance, to support the development of new tools for Reddit users) could be a direction to explore.

Acknowledgments

Thanks to Hanlin Li and Aaron Shaw for their comments on an early draft. This post was written in response to a rapidly developing discourse, so I hope to update in response to feedback, criticism, and new developments!

You can also read this article via a Notion public link.